If you’ve been in the cloud long enough, then you’re sure to have come across a scenario where you have to interact with data across accounts or organizations.

To do so, you have to set up a role in the other account that has a Trust relationship with YOUR main account. And once that ‘Trust’ is in place, you’ll need to assume that role in order to access the data.

To demonstrate how this works, we’ll use S3 as our example. What we want to be able to do is to retrieve a file from S3 in one account and upload it to S3 in another account.

Here’s today’s scenario in more detail:

Scenario Overview

Let’s say we have our main account which we’ll call Account M and a second account with an S3 bucket that we are trying to pull files from. We’ll call this Account S.

In our upcoming example, let’s imagine that in Account S there is a file in S3 that is updated daily. And from our main account, Account M, we want to pull that file every day and save it in our own S3 bucket. We’ll use a Lambda function to do this.

Example: Cross Account S3 Access Via A Lambda

First, let's create the Roles

In our secondary account, Account S, we need to create a Role that our Main account’s role can assume. This Role must:

- Trust our main account.

- Be able to pull the file from S3.

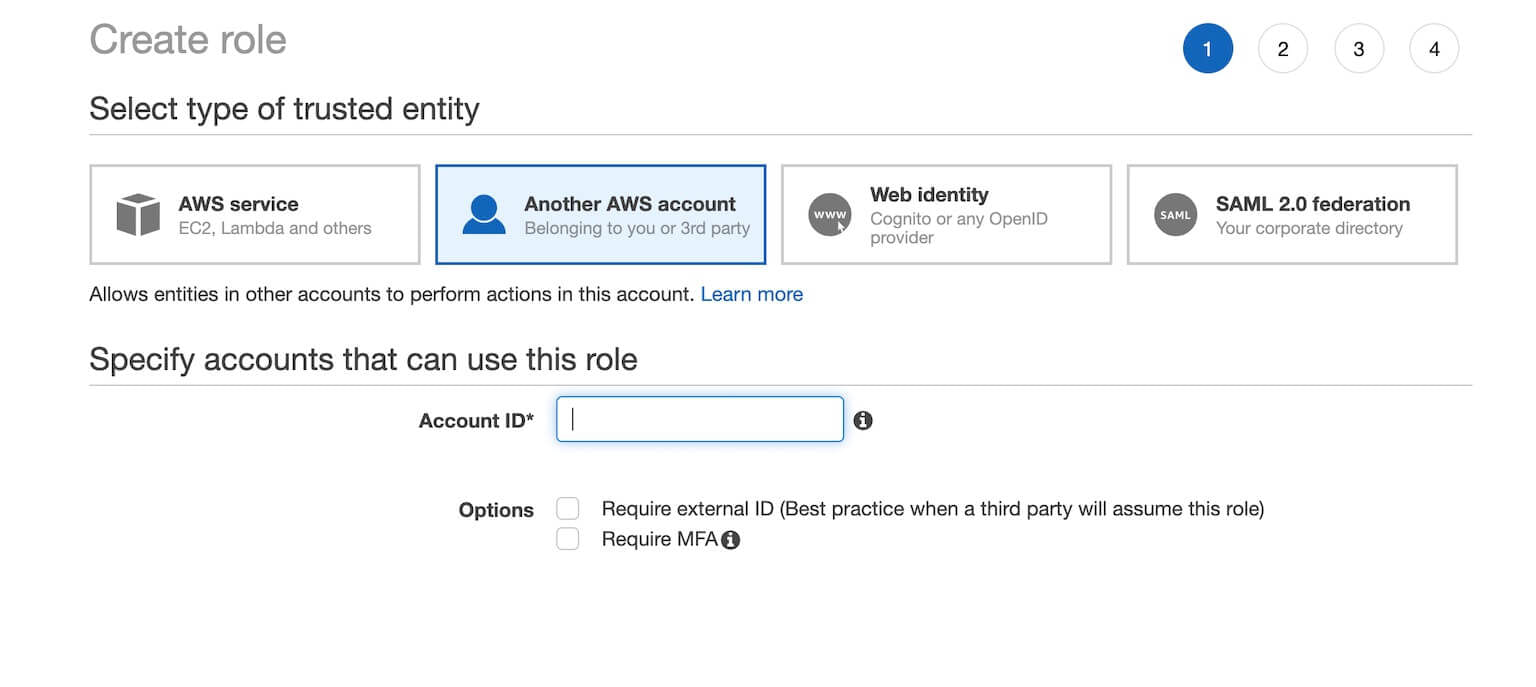

So in Account S, go to IAM and create a new Role.

For your type of trusted entity, you want to select “Another AWS account” and enter the main account’s ID. This allows your main account, Account M, to assume this Role. It creates a Trust relationship between Account S and Account M.
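For reference, the Trust relationship that the console sets up here can be sketched as a policy document. This is a minimal sketch, not the exact output of your console: the function name `build_trust_policy` and the account ID `111111111111` are placeholders made up for illustration.

```python
import json


def build_trust_policy(main_account_id: str) -> dict:
    """Return a trust policy that lets the main account assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The :root principal trusts the account as a whole. Which
                # identities in that account may actually assume the role is
                # then controlled by their own IAM permissions.
                "Principal": {"AWS": f"arn:aws:iam::{main_account_id}:root"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


# 111111111111 is a placeholder for Account M's ID.
print(json.dumps(build_trust_policy("111111111111"), indent=2))
```

You can compare the printed JSON against the Trust relationships tab of the Role you just created.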

For permissions, you’ll want to create a policy that allows this role to pull files from S3. This usually involves the s3:GetObject action but can also include s3:ListBucket, s3:ListBucketVersions, KMS key policies, etc., depending on how your environment is set up. What I like to do is to just add the AdministratorAccess policy up front and, once I get it working, come back and prune it down to very specific permissions.
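When you come back to prune the permissions, a pared-down read policy might look something like the sketch below. It assumes an unencrypted bucket and only covers reading and listing; `build_s3_read_policy` and the bucket name are hypothetical, and your environment may need more actions than this.

```python
import json


def build_s3_read_policy(bucket_name: str) -> dict:
    """Return a policy that allows reading objects from one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Object-level actions apply to the objects, hence the /*.
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket_name}/*"],
            },
            {
                # Bucket-level actions apply to the bucket ARN itself.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket_name}"],
            },
        ],
    }


# "account-s-bucket" is a placeholder bucket name.
print(json.dumps(build_s3_read_policy("account-s-bucket"), indent=2))
```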

So now we have a role that Trusts our main account and can pull files from S3. Copy this role’s ARN into your notepad as we’ll need to use it in our main account.

Now we must create a role in our main account that can assume that role in our second account. Since we are using a Lambda, let’s go ahead and set that up first.

Let's create the Lambda

First, create a new Lambda function in the main account using Python as the runtime and just the default execution role that it gives us.

Second, add the following code:

    import json
    import os

    import boto3
    from botocore.exceptions import ClientError


    def lambda_handler(event, context):
        account_m_bucket = os.environ['MAIN_BUCKET']
        account_s_bucket = os.environ['S_BUCKET']
        # Placeholder: replace with the key of the file you want to copy.
        account_s_filename = 'nameOfFile'
        account_s_id = os.environ['S_ID']
        account_s_role_name = os.environ['S_ROLE_NAME']

        # Assume the role in Account S to get temporary credentials.
        sts_connection = boto3.client('sts')
        subacct = sts_connection.assume_role(
            RoleArn=f"arn:aws:iam::{account_s_id}:role/{account_s_role_name}",
            RoleSessionName='S3FileTransfer'
        )

        ACCESS_KEY = subacct['Credentials']['AccessKeyId']
        SECRET_KEY = subacct['Credentials']['SecretAccessKey']
        SESSION_TOKEN = subacct['Credentials']['SessionToken']

        # s3 uses the Lambda's own role in Account M.
        s3 = boto3.client("s3")

        # s3_client uses the assumed role's credentials in Account S.
        s3_client = boto3.client(
            's3',
            aws_access_key_id=ACCESS_KEY,
            aws_secret_access_key=SECRET_KEY,
            aws_session_token=SESSION_TOKEN,
        )

        try:
            # Download from Account S into /tmp, then upload to Account M.
            s3_client.download_file(account_s_bucket, account_s_filename, f"/tmp/{account_s_filename}")
            s3.upload_file(f"/tmp/{account_s_filename}", account_m_bucket, account_s_filename)
        except ClientError as e:
            print(e)
            return False

        return {
            'statusCode': 200,
            'body': json.dumps('Uploaded to s3')
        }

A few things to note:

- Be sure to define the environment variables in your Lambda function under the Configuration tab > Environment variables. So for the main bucket name you’d add MAIN_BUCKET=yourMainBucketsName. The code also reads S_BUCKET, S_ID, and S_ROLE_NAME.

- Note that s3_client is built from the temporary credentials of the assumed role in our secondary account, while s3 uses the Lambda’s own role in our main account. So we use s3_client to pull from the secondary account and s3 to upload to the main account.

- In this Lambda function, we are downloading the file from the secondary account and uploading it to the main account. You could also use the copy_object boto3 function to do this but it’s a bit more nuanced.
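The two-client pattern described in the notes above also works without the /tmp round trip. The sketch below is not the copy_object approach; it is a hedged variation that streams the object from one client straight into the other. The function name `stream_between_accounts` is made up for illustration, and the clients are passed in as arguments so the same code runs with the `s3_client` and `s3` objects from the Lambda.

```python
def stream_between_accounts(source_client, dest_client,
                            source_bucket, dest_bucket, key):
    """Copy one object by streaming it from source_client to dest_client."""
    # get_object returns a dict whose 'Body' is a file-like streaming object.
    body = source_client.get_object(Bucket=source_bucket, Key=key)["Body"]
    try:
        # upload_fileobj reads from a binary file-like object and uploads it
        # under the given key, so nothing is written to local disk.
        dest_client.upload_fileobj(body, dest_bucket, key)
    finally:
        body.close()
    return key


# Inside the Lambda handler this would be called roughly like:
# stream_between_accounts(s3_client, s3, account_s_bucket,
#                         account_m_bucket, account_s_filename)
```

The trade-off is that the download and upload are no longer separate steps, so a failure partway through is a little harder to attribute to one account or the other.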

Let's adjust the Lambda role

Next, you’ll need to update the Lambda Role (you can find the Role in the Configuration Tab > Permissions) to:

- Be allowed to assume the role in Account S. We are using STS in the lambda function to assume the Role in Account S.

You’ll need to add the sts:AssumeRole action, with the ARN of the role in Account S as the resource. This gives your Lambda permission to assume that role. Something like:

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["sts:AssumeRole"],
                "Resource": ["arn:aws:iam::<account_s_id>:role/<account_s_role_name>"]
            }
        ]
    }

- Upload the file to the main account’s S3 bucket. You’ll need to add the s3:PutObject action with your bucket as the Resource, but each environment is different. Again, you may want to just add the AdministratorAccess policy and whittle it down after you get it working.
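Putting the two Lambda role permissions together, a whittled-down policy could look roughly like the sketch below. The helper name `build_lambda_role_policy`, the role ARN, and the bucket name are all placeholders, and it assumes the Lambda only needs to assume one role and write to one bucket.

```python
import json


def build_lambda_role_policy(account_s_role_arn: str, main_bucket: str) -> dict:
    """Return a policy for the Lambda role: assume the remote role, upload locally."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Lets the Lambda call sts:AssumeRole on the Account S role.
                "Effect": "Allow",
                "Action": ["sts:AssumeRole"],
                "Resource": [account_s_role_arn],
            },
            {
                # Lets the Lambda upload objects into the main bucket.
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": [f"arn:aws:s3:::{main_bucket}/*"],
            },
        ],
    }


# Both arguments are placeholder values.
policy = build_lambda_role_policy(
    "arn:aws:iam::222222222222:role/account-s-role",
    "account-m-bucket",
)
print(json.dumps(policy, indent=2))
```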

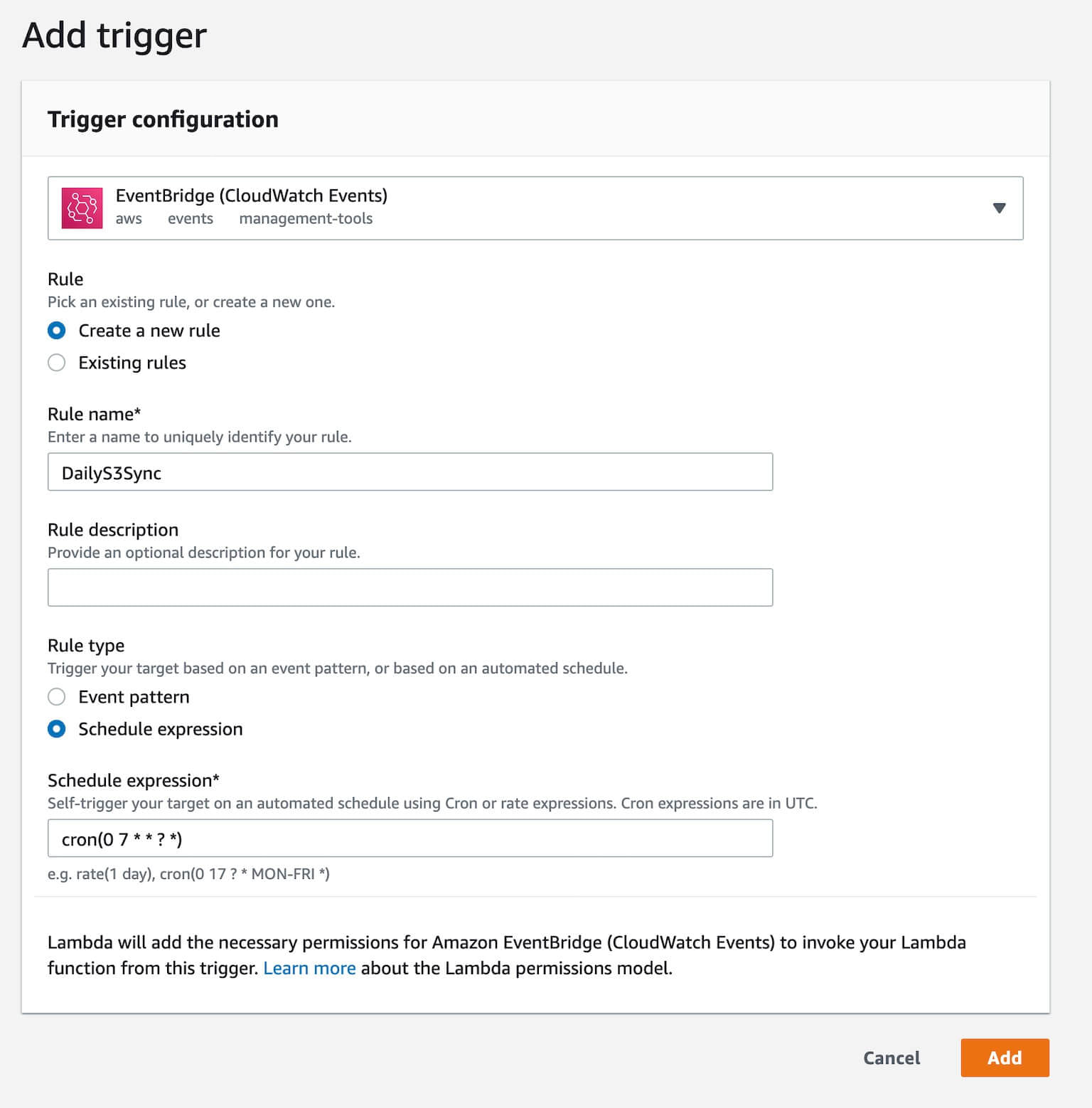

Adding a daily trigger to the Lambda

Finally, click Add Trigger, choose EventBridge, and create a new Rule that will trigger the Lambda function every day at 7 a.m. Keep in mind that cron schedules on EventBridge rules are evaluated in UTC.
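If you would rather script the Rule than click through the console, the schedule part can be sketched as below. The helper names and the rule name are hypothetical; the events client is passed in as an argument, and this only creates the Rule itself. Attaching the Lambda as a target and granting EventBridge permission to invoke it are separate steps that the console trigger handles for you.

```python
def daily_schedule_expression(hour_utc: int, minute: int = 0) -> str:
    """Build an EventBridge cron expression that fires once a day (UTC)."""
    if not (0 <= hour_utc <= 23 and 0 <= minute <= 59):
        raise ValueError("hour_utc must be 0-23 and minute must be 0-59")
    # Fields: minutes hours day-of-month month day-of-week year
    return f"cron({minute} {hour_utc} * * ? *)"


def create_daily_rule(events_client, rule_name: str, hour_utc: int):
    """Create or update a scheduled rule using the given events client."""
    return events_client.put_rule(
        Name=rule_name,
        ScheduleExpression=daily_schedule_expression(hour_utc),
        State="ENABLED",
    )


# With boto3 this would be called roughly like:
# create_daily_rule(boto3.client("events"), "daily-s3-transfer", 7)
print(daily_schedule_expression(7))
```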

Conclusion

So to summarize, in order to pull from an S3 bucket in a secondary account, you need to:

- Create a Role in the secondary account that “Trusts” your main account and also can pull files from the secondary S3 bucket.

- Create a Role in your main account that can assume that Role in the secondary account and also upload that file to the main S3 bucket.

That’s it. Any questions?
